43 mengubah file dengan 2959 tambahan dan 0 penghapusan

+ 118

- 0

.gitignore

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 201

- 0

LICENSE

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 44

- 0

Pipfile

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 477

- 0

Pipfile.lock

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 465

- 0

README.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 104

- 0

cnnlib/network.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 81

- 0

cnnlib/recognition_object.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 11

- 0

conf/captcha_config.json

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 29

- 0

conf/sample_config.json

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 60

- 0

conf/sample_config.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 57

- 0

gen_sample_by_captcha.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 95

- 0

log_ware.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

TEMPAT SAMPAH

readme_image/Cage1.jpg

TEMPAT SAMPAH

readme_image/Cage2.jpg

TEMPAT SAMPAH

readme_image/Kaptcha_2.png

TEMPAT SAMPAH

readme_image/Kaptcha_3.png

TEMPAT SAMPAH

readme_image/Kaptcha_5.png

TEMPAT SAMPAH

readme_image/SimpleCaptcha_1.jpg

TEMPAT SAMPAH

readme_image/SimpleCaptcha_2.jpg

TEMPAT SAMPAH

readme_image/SimpleCaptcha_3.jpg

TEMPAT SAMPAH

readme_image/SkewPassImage.jpg

TEMPAT SAMPAH

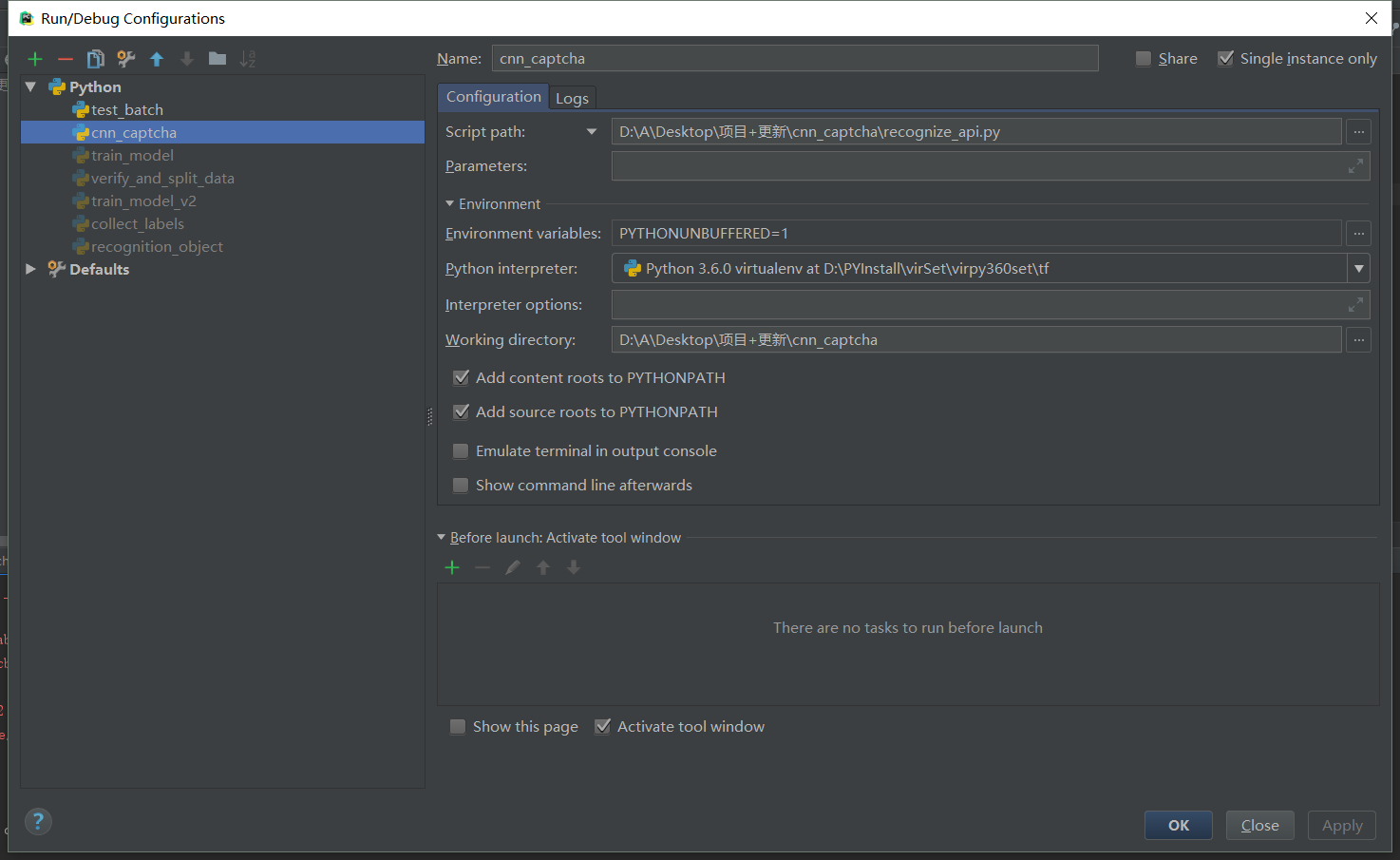

readme_image/bug_api启动失败.png

TEMPAT SAMPAH

readme_image/iCaptcha.jpg

TEMPAT SAMPAH

readme_image/jcaptcha1.jpg

TEMPAT SAMPAH

readme_image/jcaptcha2.jpg

TEMPAT SAMPAH

readme_image/jcaptcha3.jpg

TEMPAT SAMPAH

readme_image/patchca_1.png

TEMPAT SAMPAH

readme_image/py_Captcha-1.jpg

TEMPAT SAMPAH

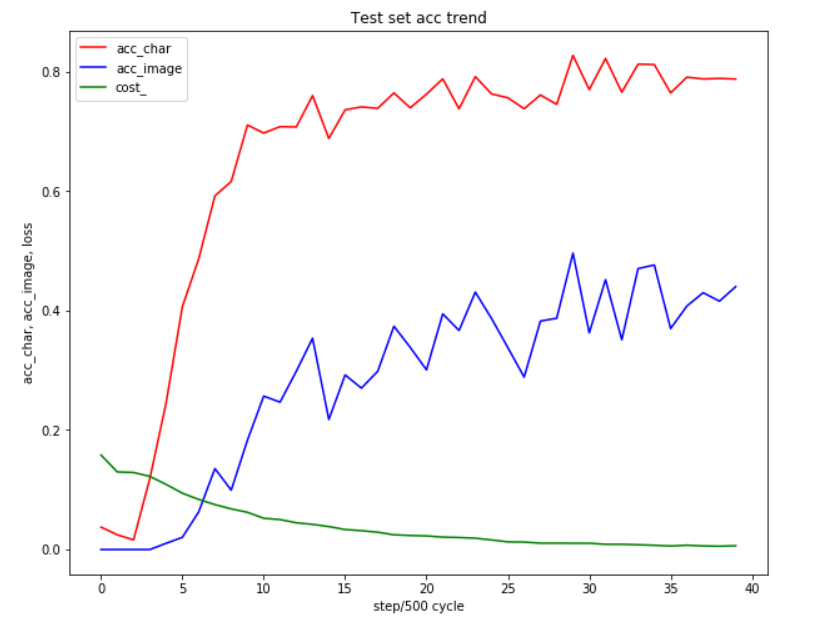

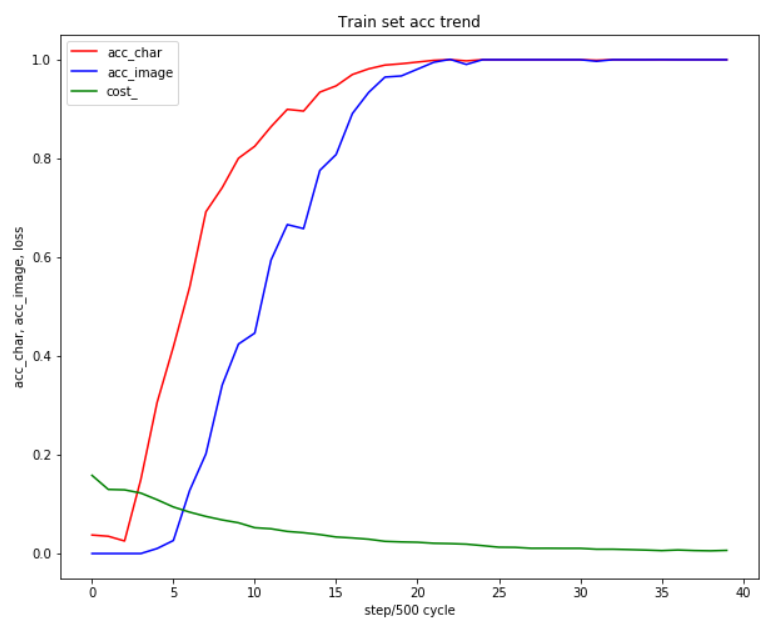

readme_image/test_acc.png

TEMPAT SAMPAH

readme_image/train_acc.png

TEMPAT SAMPAH

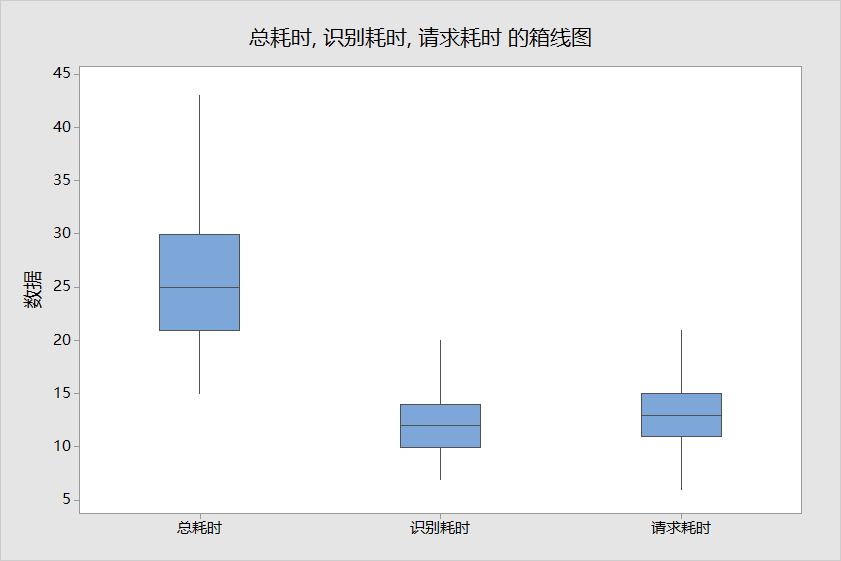

readme_image/压力测试结果.png

+ 76

- 0

recognize_local.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 131

- 0

recognize_online.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 78

- 0

recognize_time_test.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 36

- 0

requirements.txt

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 125

- 0

test_batch.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 31

- 0

tools/collect_labels.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 91

- 0

tools/correction_captcha.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 19

- 0

tools/gen_md_content.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 288

- 0

train_model.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 160

- 0

verify_and_split_data.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 75

- 0

webserver_captcha_image.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 107

- 0

webserver_recognize_api.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||